Getting Started with PyTorch Lightning

Welcome to the exciting world of PyTorch Lightning! If you’re a data scientist or machine learning enthusiast, chances are you’ve heard of PyTorch, the popular open-source machine learning library. But have you heard of its Lightning counterpart?

PyTorch Lightning is a lightweight framework that sits on top of PyTorch and aims to make the training process more streamlined and efficient. It provides a set of abstractions and utilities that allow you to focus on the high-level aspects of your model, while it takes care of the low-level details of the training process.

Think of it as a superhero sidekick to PyTorch. PyTorch Lightning will be your Robin, taking care of all the grunt work while you focus on your Batman-level model-building skills. With PyTorch Lightning, you can enjoy faster prototyping, more concise code, and seamless scaling.

But don’t just take our word for it. In this blog post, we’ll walk you through the basics of PyTorch Lightning and get you started on building your first model. From installation to advanced features, we’ve got you covered. So put on your cape, grab your utility belt, and let’s get started with PyTorch Lightning!

Installing PyTorch Lightning

Installing PyTorch Lightning is a straightforward process, and there are a couple of different ways you can do it depending on your setup. Here’s a step-by-step guide to getting you started:

Firstly, you need to make sure you have PyTorch installed on your system. If you haven’t already installed it, you can do so by following the instructions on the PyTorch website.

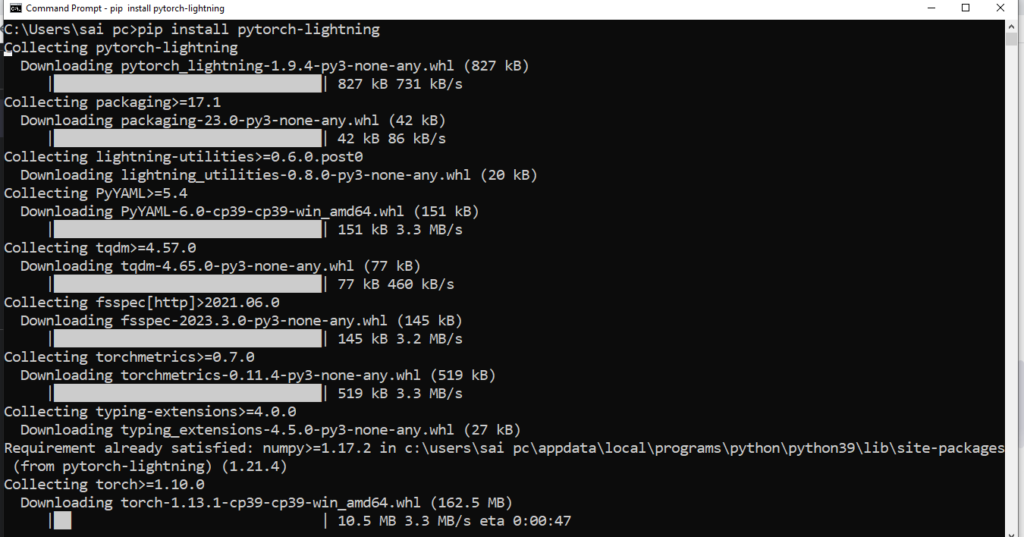

Once you have PyTorch installed, you can use either pip or conda to install PyTorch Lightning. To use pip, open up your terminal or command prompt and enter the following command:

pip install pytorch-lightning

Then press Enter to run it.

If you prefer to use conda, you can enter the following command instead:

conda install pytorch-lightning -c conda-forge

Once the installation process is complete, you can verify that PyTorch Lightning has been installed correctly by importing it in a Python script or the Python console:

import pytorch_lightning as pl

If you encounter any issues during the installation process, there are a few things you can try. First, make sure your installed PyTorch version is one that your PyTorch Lightning release supports. The PyTorch Lightning documentation includes a version compatibility table you can check.

If you’re still having issues, try uninstalling and reinstalling PyTorch Lightning, or checking the PyTorch Lightning documentation for troubleshooting tips.

Overall, the process of installing PyTorch Lightning is relatively simple and shouldn’t take you more than a few minutes. So go ahead and get started with PyTorch Lightning!

PyTorch Lightning Components

PyTorch Lightning is made up of three core components that work together to make the training process more streamlined and efficient: the LightningModule, the Trainer, and the DataModule (the LightningDataModule class).

LightningModule

The LightningModule is the backbone of PyTorch Lightning. It is a subclass of PyTorch's nn.Module that encapsulates your model architecture together with its training logic, which keeps your code organized. You define the layers and the forward method, what happens in a single training step (training_step), and which optimizer to use (configure_optimizers); Lightning then runs the backward pass, the optimizer steps, and device placement for you. You can still add your own methods and layers as needed.

Trainer

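The Trainer is responsible for running the training process. It automates the training loop: it calls your training step, runs the backward pass and the optimizer you defined in the LightningModule, moves data to the right device, logs metrics, and saves checkpoints, so you don't have to write that boilerplate yourself. You customize the Trainer through its arguments, for example the maximum number of epochs or which hardware to use (the batch size, by contrast, is set in your DataLoader), and you can monitor training progress through any metrics you log, such as loss and accuracy.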

DataModule

The DataModule is responsible for loading and preparing your data for training. It groups the download, preprocessing, and splitting steps together with the train, validation, and test DataLoaders in a single reusable class, and it can also apply data augmentation and preprocessing transforms. A DataModule is optional, since the Trainer also accepts plain DataLoaders, but it makes your data pipeline easier to share and reuse across experiments.

By combining these three components, PyTorch Lightning simplifies the training process and allows you to focus on the high-level aspects of your model. Whether you’re working on a small project or a large-scale production system, PyTorch Lightning’s components can help you build and train your models with ease.

Building a Simple Model with PyTorch Lightning

Building a simple model with PyTorch Lightning is a great way to get started with the framework. In this example, we’ll walk through the process of building a simple neural network to classify handwritten digits from the MNIST dataset.

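First, we’ll define our LightningModule. This includes the architecture of our model; the forward method, which takes in an input image and returns a score (logit) for each of the ten digit classes, the highest of which is the predicted class; the training step; and the optimizer configuration. We can also include any custom functions or layers we need.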

Next, we’ll define our DataModule, which will handle downloading and preparing the MNIST dataset. We can use torchvision’s MNIST dataset class to load the data, torchvision’s transforms to convert and normalize the images (and to apply any necessary data augmentation), and PyTorch’s built-in DataLoader class to batch them.

Finally, we’ll instantiate the Trainer and pass in our LightningModule and DataModule. Each hyperparameter belongs to the component that uses it: the number of epochs is set on the Trainer, the learning rate in the LightningModule’s optimizer, and the batch size in the DataModule’s DataLoaders.

Once we’ve set everything up, we can start training our model by calling the fit method on the Trainer with our model and DataModule (trainer.fit(model, datamodule=dm)). PyTorch Lightning will take care of the rest, including calculating gradients, updating the weights, and logging whatever metrics we record with self.log, such as loss and accuracy.

Overall, building a simple model with PyTorch Lightning is a straightforward process that can be easily customized to suit your needs. Whether you’re a beginner or an experienced data scientist, PyTorch Lightning’s streamlined components make building and training models a breeze.

Advanced Features of PyTorch Lightning

PyTorch Lightning has a wide range of advanced features that can help you build and train complex machine-learning models with ease. Here are some of the key features of PyTorch Lightning that you can use to take your machine-learning projects to the next level:

Distributed training:

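PyTorch Lightning makes it easy to scale your model training across multiple GPUs or even multiple machines. You choose the hardware and a distributed strategy, such as distributed data parallel (DDP), through Trainer arguments, without rewriting your training code.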

Callbacks:

Callbacks are a powerful tool in PyTorch Lightning that let you customize the training process by running your own code at specific points in the training loop, such as the end of each epoch. Lightning ships with built-in callbacks for common tasks like early stopping and model checkpointing, and you can write your own for things like custom logging.

Transfer learning:

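PyTorch Lightning makes it easy to leverage pre-trained models for transfer learning. You can freeze the pre-trained layers, train a new task-specific head, and later unfreeze some or all layers to fine-tune them for your specific use case; the LightningModule’s freeze() and unfreeze() methods and the BaseFinetuning callback help with this.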

Hyperparameter tuning:

PyTorch Lightning integrates with tools like Optuna and Ray Tune to make hyperparameter tuning a breeze. You can easily search over different hyperparameter configurations to find a well-performing combination for your model.

Profiling and debugging:

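PyTorch Lightning comes with built-in tools for profiling and debugging your model, such as profilers that time each part of the training loop and a fast_dev_run mode that runs just a single batch to catch bugs quickly. These can be incredibly helpful for identifying performance bottlenecks or debugging issues during training.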

Overall, PyTorch Lightning’s advanced features make it a powerful tool for building and training complex machine-learning models. By leveraging these features, you can take your projects to the next level while spending less time on training infrastructure and more on the models themselves.

Conclusion

In conclusion, PyTorch Lightning is an excellent framework for building and training machine learning models. Its intuitive API, powerful abstractions, and advanced features make it a popular choice for both beginners and experienced practitioners.

With PyTorch Lightning, you can focus on the high-level aspects of your model and let the framework handle the low-level details of the training process. This allows you to build and train complex models more efficiently, while also making it easier to experiment with different architectures and hyperparameter configurations.

Overall, PyTorch Lightning is a valuable tool in the machine learning toolkit, and its popularity continues to grow as more and more developers discover its benefits. Whether you’re a seasoned machine learning practitioner or just getting started in the field, PyTorch Lightning is definitely worth exploring. We hope this article has provided you with a solid foundation for getting started with PyTorch Lightning, and we encourage you to continue exploring the framework and all it has to offer.